A predictive performance model for immersive interactions in Mixed Reality

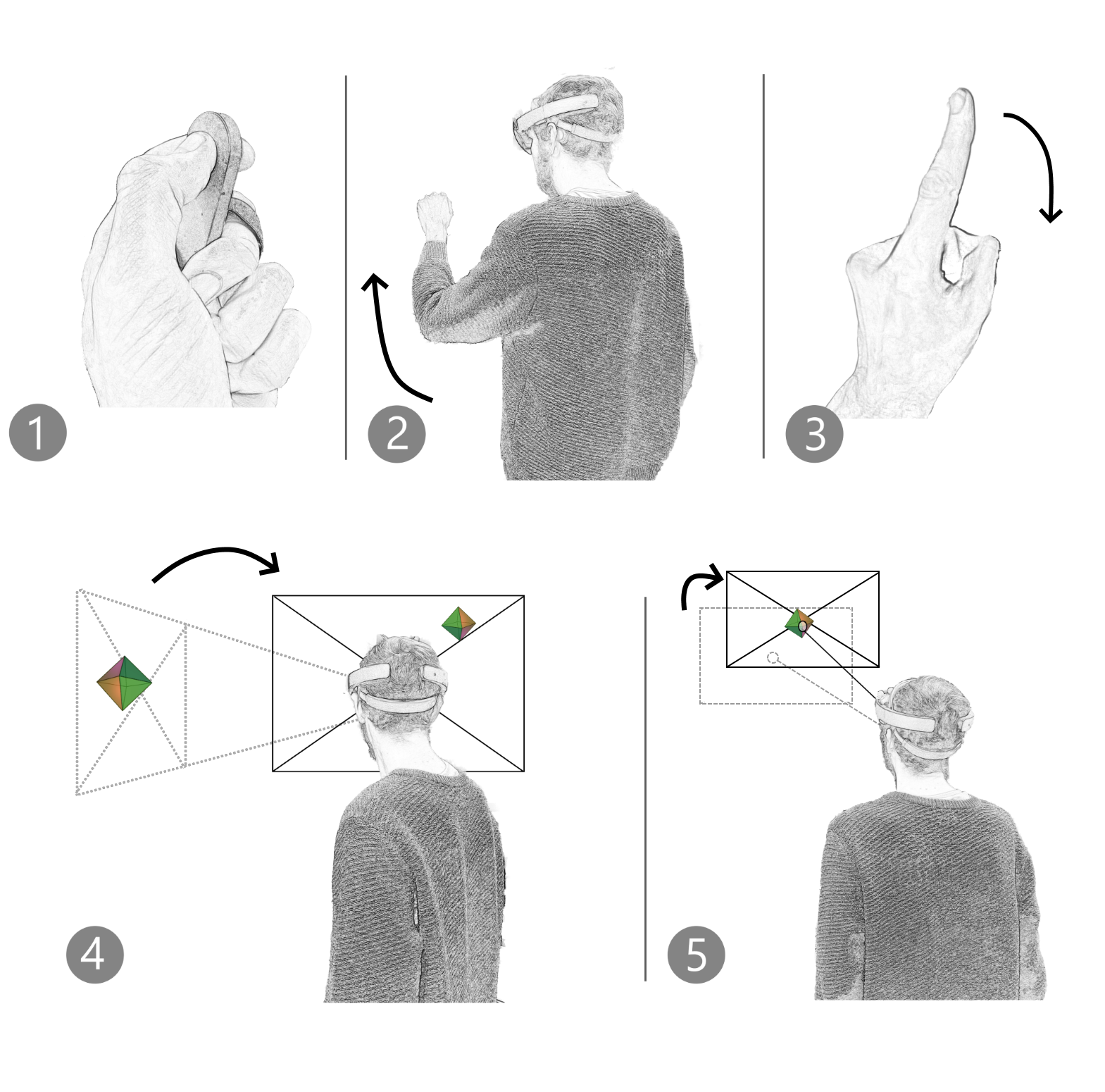

Designing immersive interaction for mixed reality head-mounted displays (HMDs) remains a tedious task, which can hinder the adoption of such devices. Indeed, the effects of interface design on task performance are difficult to anticipate during the design phase: the spatial layout of virtual objects and the interaction techniques used to select them can both affect task completion time. Moreover, testing such interfaces with users in controlled experiments requires considerable time and effort. To overcome this problem, predictive models such as the Keystroke-Level Model (KLM) can be used to predict the time required to complete an interactive task at an early stage of the design process. However, these models have not yet been extended to cover the interaction techniques specific to immersive HMD-based mixed reality environments. In this paper, we propose an extension of the KLM that models immersive HMD-based mixed reality interaction. First, we propose new operators and experimentally determine the unit time of each. Then, we perform three experiments based on realistic interaction scenarios to consolidate our model. These experiments confirm the validity of our extension of the KLM for predicting interaction time in immersive HMD-based mixed reality.
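To make the prediction principle concrete, the following minimal sketch shows how a KLM-style estimate is typically computed: a task is decomposed into a sequence of operators, and the predicted time is the sum of their unit times. The function name `predict_task_time` and the table name `DESKTOP_KLM_UNIT_TIMES` are hypothetical. The unit times are the commonly cited values of the original desktop KLM, used here only as placeholders; they are not the mixed reality operators or unit times determined in this paper.

```python
from typing import Dict, Iterable

# Commonly cited unit times (in seconds) of the original desktop KLM.
# Assumption: used purely as placeholders. These are NOT the mixed
# reality operators or unit times measured in this paper.
DESKTOP_KLM_UNIT_TIMES: Dict[str, float] = {
    "K": 0.28,  # keystroke or button press (average typist)
    "P": 1.10,  # pointing at a target with a mouse
    "H": 0.40,  # homing the hand on the keyboard or mouse
    "M": 1.35,  # mental preparation
}


def predict_task_time(operators: Iterable[str],
                      unit_times: Dict[str, float]) -> float:
    """Return the predicted completion time: the sum of the unit times
    of every operator in the sequence."""
    total = 0.0
    for op in operators:
        if op not in unit_times:
            raise KeyError(f"no unit time defined for operator {op!r}")
        total += unit_times[op]
    return total


if __name__ == "__main__":
    # Hypothetical task: think, reach for the mouse, point, click.
    sequence = ["M", "H", "P", "K"]
    predicted = predict_task_time(sequence, DESKTOP_KLM_UNIT_TIMES)
    print(f"Predicted time: {predicted:.2f} s")
```

Under this scheme, an extension of the model amounts to adding entries to the unit-time table, which is why each new operator needs an experimentally determined unit time.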