Exploratory study of the design space for interactions with data located on physical objects (unpublished)

Abstract

The emergence of embedded technologies and the Internet of Things allows physical objects to be a source of data (e.g. a temperature sensor in a radiator). Physical objects are endowed with digital capabilities to access and manipulate this data. They can become input devices (e.g. tangible interfaces) or output devices (e.g. data physicalisation), bringing the physical world closer to the digital world. Using the object or its immediate environment as a display surface (output device) turns the physical object into a physical referent (Willett et al., 2017). The data are thus closer to their source, which helps users picture them in their context. However, interaction techniques for manipulating data displayed in context must take into account a multitude of parameters inherent to the physical world. In this project, we present a design space that describes and formalises the factors involved in creating interaction techniques with data located close to or on their physical referent. This space is intended to help designers identify the main interaction factors and thus speed up the creation of interactive visualisation systems with situated data.

Introduction

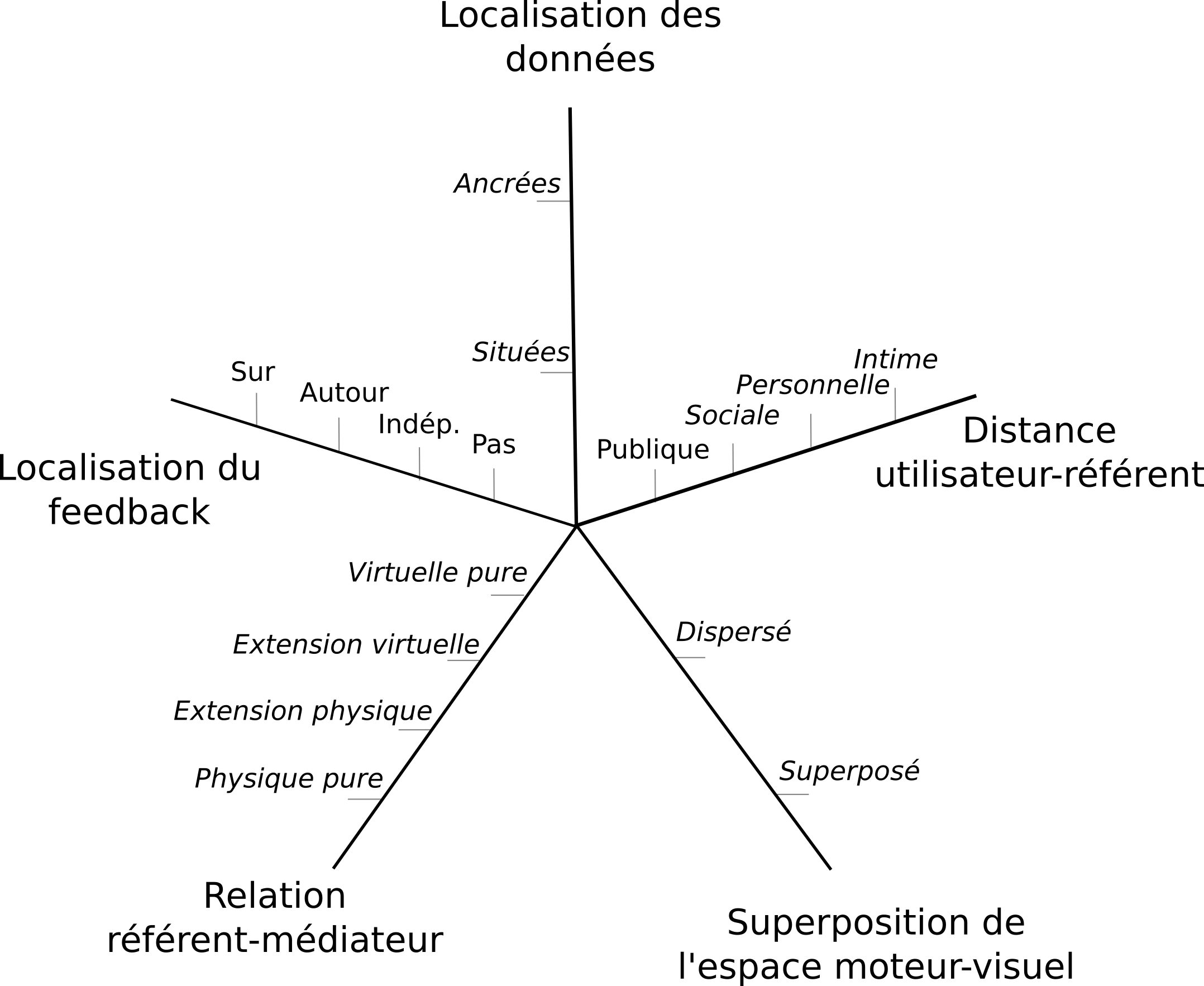

With the emergence of new technologies (e.g. immersive headsets, embedded technologies, depth cameras), many solutions for interacting with the digital world rely on everyday physical objects. As early as 1995, Fitzmaurice et al. introduced the concept of graspable user interfaces (Fitzmaurice et al., 1995), which allow digital artefacts to be manipulated physically. The paradigms of augmented reality and tangible interfaces then gradually spread, and technological advances now make them accessible in many domains (e.g. interaction in a public context (Ma et al., 2015), tourism (Cranmer, 2019), data visualisation (Spindler et al., 2010)). More recently, depth cameras have made physical objects easier to detect, opening up new possibilities: for example, objects can now be turned into display surfaces (Jones et al., 2014). This is spatial augmented reality (Bimber & Raskar, 2005), which anchors interaction more firmly in the physical world. In parallel, the democratisation of the Internet of Things (IoT) allows physical objects to carry their own data (e.g. the temperature of a radiator or the electricity consumption of an outlet). The physical object is therefore no longer just a tool for interacting with a digital world; it becomes a true physical referent (Willett et al., 2017) for this data, which must then be presented to users. The concept of situated data was introduced to describe the spatial anchoring of a data representation to its physical referent. Such representations open up new research perspectives, particularly for the design of input and output interaction techniques. To rationalise the design of interaction techniques (input and output) for interactive situated data visualisation systems, it is important to identify the parameters of the physical world involved in the interaction.
A design space that structures the description of these parameters and facilitates their evaluation and comparison is therefore required. Among the design spaces that take the physical world into account, several aim to characterise input interaction. Previous work has described, for example, the presence of a metaphor (Fishkin, 2004), the input/output devices used (Ledermann & Schmalstieg, 2006), the relationships between physical objects (Shaer et al., 2004) or the role of the human body (Klemmer et al., 2006). Spaces for classifying data visualisation systems have considered how to decompose visualisations into a series of operations (Bach et al., 2014), how to visualise dynamic graphs (Beck et al., 2014), the tools used to build visualisations (Mei et al., 2018) or the technology used (Mwalongo et al., 2016). Willett et al. have classified situated data visualisation systems (Willett et al., 2017). However, to our knowledge, no design space has been proposed to characterise both the input and the output interaction of interactive situated data visualisation systems. The design of an interaction technique is also influenced by how the data are rendered: depending on their shape and location, these renderings can, for example, cause visual overload, and must therefore be taken into account. Our design space for interaction with situated data builds on the visualisation space of Willett et al. (Willett et al., 2017), which distinguishes four cases of situated data representations near a physical referent according to 1) their form (physicalisation or visualisation) and 2) their location (close to or anchored on the referent). To our knowledge, although all four cases are populated by systems presenting situated data, not all of them are populated by interactive systems (e.g. systems that let the user manipulate or select data).
Indeed, systems offering a physicalised representation of situated data, whether close to or anchored on a physical referent, do not offer users any interaction possibilities. Our design space therefore extends only the dimension concerning data location. Extending a visualisation space (output interaction) in this way allows the interactive system to be considered as a whole; an example is given in Section 4. In this project, we propose a design space dedicated to interaction techniques with a physical referent and its situated data. It is decomposed into five dimensions (see Figure 1):

- Data localisation characterises the rendering of situated data (Willett et al., 2017): the data is either situated close to the physical referent or anchored on the physical referent.

- User-referent distance is based on proxemic distances (Ballendat et al., 2010; Hall, 1963) and captures the impact of the distance between the user and the physical referent.

- The motor-visual superposition characterises the continuity in the interaction between the user and the physical referent.

- The mediator-referent relationship expresses the degree of physicality of the input interaction between an input device, called the mediator, and the physical referent.

- The location of the interaction feedback characterises the place where this feedback is made perceptible.
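The two dimensions whose values are spelled out above (data localisation and user-referent distance), together with the four cases of Willett et al., can be encoded as a small data structure to make the design space concrete. The Python sketch below is illustrative only: all class and function names are hypothetical, the distance thresholds are the commonly cited approximate boundaries of Hall's proxemic zones rather than values fixed by this design space, and the three remaining dimensions are kept as free-text placeholders because their possible values are not listed in this section.

```python
from dataclasses import dataclass
from enum import Enum
from itertools import product


class Form(Enum):
    """Form of a situated representation (Willett et al., 2017)."""
    PHYSICALISATION = "physicalisation"
    VISUALISATION = "visualisation"


class DataLocalisation(Enum):
    """Position of the representation relative to its physical referent."""
    CLOSE = "close"
    ANCHORED = "anchored"


class ProxemicZone(Enum):
    """Hall's proxemic zones, used for the user-referent distance."""
    INTIMATE = "intimate"
    PERSONAL = "personal"
    SOCIAL = "social"
    PUBLIC = "public"


# Assumption: commonly cited approximate upper bounds (in metres) of Hall's
# zones; the design space itself does not fix these values.
ZONE_UPPER_BOUNDS_M = (
    (0.45, ProxemicZone.INTIMATE),
    (1.2, ProxemicZone.PERSONAL),
    (3.6, ProxemicZone.SOCIAL),
)


def proxemic_zone(distance_m: float) -> ProxemicZone:
    """Map a user-referent distance in metres to a proxemic zone."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    for upper_bound, zone in ZONE_UPPER_BOUNDS_M:
        if distance_m < upper_bound:
            return zone
    return ProxemicZone.PUBLIC  # anything beyond the social zone


def interactive_cases() -> list[tuple[Form, DataLocalisation]]:
    """Willett et al.'s four form x localisation cases, keeping only those
    reported in the text as populated by interactive systems."""
    return [
        (form, localisation)
        for form, localisation in product(Form, DataLocalisation)
        if form is Form.VISUALISATION
    ]


@dataclass(frozen=True)
class InteractionTechnique:
    """One point in the five-dimensional design space (hypothetical encoding).

    The last three fields are free-text placeholders because their possible
    values are not enumerated in this section.
    """
    data_localisation: DataLocalisation
    user_referent_distance_m: float
    motor_visual_superposition: str
    mediator_referent_relationship: str
    feedback_location: str

    @property
    def zone(self) -> ProxemicZone:
        """Proxemic zone derived from the user-referent distance."""
        return proxemic_zone(self.user_referent_distance_m)


# Usage: an anchored visualisation manipulated from 0.8 m away.
technique = InteractionTechnique(
    DataLocalisation.ANCHORED, 0.8, "unspecified", "unspecified", "unspecified"
)
print(technique.zone)  # ProxemicZone.PERSONAL
```

Keeping the distance thresholds in a single table makes the proxemic discretisation easy to revise if a study adopts different zone boundaries.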

References

(Willett et al., 2017) Wesley Willett, Yvonne Jansen, and Pierre Dragicevic. 2017. Embedded Data Representations. IEEE Transactions on Visualization and Computer Graphics 23, 1: 461–470. https://doi.org/10.1109/TVCG.2016.2598608

(Fitzmaurice et al., 1995) George W. Fitzmaurice, Hiroshi Ishii, and William A. S. Buxton. 1995. Bricks: Laying the Foundations for Graspable User Interfaces. In Proceedings of the SIGCHI conference on Human factors in computing systems - CHI ’95, 442–449. https://doi.org/10.1145/223904.223964

(Ma et al., 2015) Joyce Ma, Lisa Sindorf, Isaac Liao, and Jennifer Frazier. 2015. Using a Tangible Versus a Multi-touch Graphical User Interface to Support Data Exploration at a Museum Exhibit. 33–40. https://doi.org/10.1145/2677199.2680555

(Cranmer, 2019) Eleanor E. Cranmer. 2019. Designing Valuable Augmented Reality Tourism Application Experiences. 73–87. https://doi.org/10.1007/978-3-030-06246-0_6

(Spindler et al. 2010) Martin Spindler, Christian Tominski, Heidrun Schumann, and Raimund Dachselt. 2010. Tangible views for information visualization. In ACM International Conference on Interactive Tabletops and Surfaces - ITS ’10, 157. https://doi.org/10.1145/1936652.1936684

(Jones et al., 2014) Brett Jones, Lior Shapira, Rajinder Sodhi, Michael Murdock, Ravish Mehra, Hrvoje Benko, Andrew Wilson, Eyal Ofek, Blair MacIntyre, and Nikunj Raghuvanshi. 2014. RoomAlive: Magical Experiences Enabled by Scalable, Adaptive Projector-Camera Units. In Proceedings of the 27th annual ACM symposium on User interface software and technology - UIST ’14, 637–644. https://doi.org/10.1145/2642918.2647383

(Bimber & Raskar, 2005) Oliver Bimber and Ramesh Raskar. 2005. Spatial Augmented Reality: Merging Real and Virtual Worlds. 6: 83–92.

(Fishkin,2004) Kenneth P. Fishkin. 2004. A taxonomy for and analysis of tangible interfaces. Personal and Ubiquitous Computing 8, 5: 347–358. https://doi.org/10.1007/s00779-004-0297-4

(Ledermann & Schmalstieg, 2006) F. Ledermann and D. Schmalstieg. 2006. APRIL: A High-Level Framework for Creating Augmented Reality Presentations. In IEEE Virtual Reality Conference 2005 (VR’05), 187–194. https://doi.org/10.1109/VR.2005.8

(Shaer et al., 2004) Orit Shaer, Nancy Leland, Eduardo H. Calvillo-Gamez, and Robert J.K. Jacob. 2004. The TAC paradigm: Specifying tangible user interfaces. Personal and Ubiquitous Computing 8, 5: 359–369. https://doi.org/10.1007/s00779-004-0298-3

(Klemmer et al., 2006) Scott R Klemmer, Björn Hartmann, and Leila Takayama. 2006. How bodies matter: Five themes for interaction design. Proceedings of the 6th ACM conference on Designing Interactive systems - DIS ’06: 140–149. https://doi.org/10.1145/1142405.1142429

(Bach et al., 2014) B. Bach, P. Dragicevic, D. Archambault, C. Hurter, and S. Carpendale. 2014. A Review of Temporal Data Visualizations Based on Space-Time Cube Operations. Eurographics Conference on Visualization (EuroVis): 23–41. https://doi.org/10.1111/cgf.12804

(Beck et al., 2014) Fabian Beck, Michael Burch, Stephan Diehl, and Daniel Weiskopf. 2014. The State of the Art in Visualizing Dynamic Graphs. Proceedings State of the Art Reports (STARs): 83–103. https://doi.org/10.2312/eurovisstar.20141174

(Mei et al., 2018) Honghui Mei, Yuxin Ma, Yating Wei, and Wei Chen. 2018. The design space of construction tools for information visualization: A survey. Journal of Visual Languages & Computing 44: 120–132. https://doi.org/10.1016/j.jvlc.2017.10.001

(Mwalongo et al., 2016) F. Mwalongo, M. Krone, G. Reina, and T. Ertl. 2016. State-of-the-Art Report in Web-based Visualization. Computer Graphics Forum 35, 3: 553–575. https://doi.org/10.1111/cgf.12929

(Ballendat et al., 2010) Till Ballendat, Nicolai Marquardt, and Saul Greenberg. 2010. Proxemic Interaction: Designing for a Proximity and Orientation-Aware Environment. In ACM International Conference on Interactive Tabletops and Surfaces - ITS ’10, 121. https://doi.org/10.1145/1936652.1936676

(Hall, 1963) Edward T Hall. 1963. A System for the Notation of Proxemic Behavior. American Anthropologist 65, 5: 1003–1026. https://doi.org/10.1525/aa.1963.65.5.02a00020